TikTok's Algorithm Crisis, EU's Minor Protection Push, and SeaWorld's $100M Hidden Fee Reckoning

Welcome to Fair Monday: your weekly briefing on dark patterns, digital consumer protection, and the fight for ethical design. Every Monday, we deliver the latest developments in regulatory enforcement, class action lawsuits, and industry accountability, tracking how major platforms from Amazon to Meta are being held responsible for deceptive practices that manipulate user behavior, exploit consumer trust, and undermine digital rights. Whether you're a legal professional, UX designer, compliance officer, or simply a consumer who wants to understand how digital deception works, Fair Monday provides the insights, case analysis, and precedent-setting developments you need to navigate the evolving landscape of digital fairness.

This week's Fair Monday centers on protecting minors from digital manipulation.

Our news for this week:

TikTok algorithm violations: New evidence of DSA non-compliance and dark pattern risks

Amnesty International's October 2025 research reports critical Digital Services Act (DSA) compliance failures at TikTok, showing how engagement-driven algorithms systematically harm minors. The investigation documented TikTok's recommender system directing accounts registered as 13-year-olds toward harmful content within 3-4 hours, a clear violation of child safety requirements under the DSA.

The European Commission's ongoing investigation, opened in February 2024, examines violations of DSA Articles 28(1), 34(1), 34(2), and 35(1), focusing on inadequate risk assessments and safety-by-design failures for minors. This precedent-setting case exposes how engagement-driven algorithms create systemic risks, with potential penalties of up to 6% of global annual turnover for platforms that fail their compliance obligations.

Key compliance risks identified:

- Addictive design patterns: Infinite scroll, autoplay, and engagement-based recommendations prioritize platform metrics over user wellbeing

- Failed content moderation: Harmful material remains online despite user reports, and the recommender system amplifies dangerous content

- Inadequate risk mitigation: TikTok's stated safety measures were shown to be ineffective in technical testing

The European Commission investigation (launched February 2024) examines potential DSA violations including failure to conduct adequate risk assessments and implement proportionate mitigation measures for systemic risks to minors.

For digital platforms, this case demonstrates regulatory scrutiny of algorithm transparency, content recommendation systems, and safety-by-design obligations. Companies must audit recommender systems for harmful amplification effects and document effective compliance measures, or face penalties of up to 6% of global annual turnover.
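To make the 6% ceiling concrete, here is a minimal sketch of the arithmetic. The function name and the EUR 10 billion figure are hypothetical, chosen only for illustration; actual fines are set case by case and may be far below the ceiling.

```python
from decimal import Decimal

# The 6% ceiling cited in the text above.
DSA_MAX_FINE_RATE = Decimal("0.06")


def max_dsa_fine(global_annual_turnover: Decimal) -> Decimal:
    """Return the upper bound on a fine at the 6% ceiling."""
    if global_annual_turnover < 0:
        raise ValueError("turnover must be non-negative")
    return global_annual_turnover * DSA_MAX_FINE_RATE


# Hypothetical platform with EUR 10 billion in global annual turnover.
print(max_dsa_fine(Decimal(10_000_000_000)))
```

For the hypothetical EUR 10 billion platform, the ceiling works out to EUR 600 million.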

EU Parliament just raised the stakes for platform compliance

The European Parliament's October 16, 2025 report on minor protection online sets out recommendations for DSA enforcement and upcoming Digital Fairness Act provisions, directly targeting addictive design features and dark patterns that exploit children's behavioral vulnerabilities.

Prohibited design features (default for minors):

- Engagement-based recommender algorithms that maximize time-on-platform

- Infinite scrolling and continuous content feeds

- Autoplay functionality eliminating natural stopping points

- Loot boxes and gambling-like game mechanisms

- Dark patterns including manipulative UI/UX elements

- Targeted advertising to children under 16
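For product teams, "off by default for minors" could translate into age-aware feature flags. The sketch below is one possible shape, not a mandated design: the class, the flag names, the under-18 definition of "minor", and the choice to treat unknown ages protectively are all assumptions. Dark patterns are omitted because they are not a single toggle.

```python
from dataclasses import dataclass
from typing import Optional

MINOR_AGE = 18         # assumption: "minor" means under 18
AD_TARGETING_AGE = 16  # from the list above: no targeted ads under 16


@dataclass(frozen=True)
class FeatureFlags:
    """Hypothetical per-user flags; True means the feature is enabled."""
    engagement_based_recommendations: bool = True
    infinite_scroll: bool = True
    autoplay: bool = True
    loot_boxes: bool = True
    targeted_advertising: bool = True


def flags_for_user(age: Optional[int]) -> FeatureFlags:
    """Return default flags for a user of the given age."""
    if age is not None and age >= MINOR_AGE:
        return FeatureFlags()
    # Minors, and users of unknown age (an assumption), get the
    # protective defaults. Targeted ads stay on only for known 16-17s.
    ads_allowed = age is not None and age >= AD_TARGETING_AGE
    return FeatureFlags(
        engagement_based_recommendations=False,
        infinite_scroll=False,
        autoplay=False,
        loot_boxes=False,
        targeted_advertising=ads_allowed,
    )


print(flags_for_user(14))
```

Keeping the defaults in one function makes it easier to document, and later audit, which features a minor actually sees.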

New compliance obligations:

- Mandatory safety-by-design requiring proactive risk mitigation during product development

- Age verification systems: EU-wide minimum age of 16 for social media access (13 with parental consent)

- Personal liability for senior management in cases of persistent violations

- Non-personalized alternatives to profiling-based recommendation systems

MEPs explicitly called for platforms to disable "manipulative features like infinite scrolling, autoplay, disappearing stories, and harmful gamification practices" that "deliberately exploit minors' behaviour to boost engagement."

Regulatory convergence: The TikTok DSA investigation (February 2024) examines "behavioral addictions" and "rabbit hole effects" from these same features. Parliament's vote (November 24-27, 2025) will formalize legislative requirements, while the Digital Fairness Act (expected 2026) will establish EU-wide dark pattern prohibitions.

Colorado takes bold step on digital child safety

On October 8, 2025, Colorado adopted groundbreaking amendments to the Colorado Privacy Act (CPA), establishing new standards for protecting minors from manipulative digital design patterns without requiring mandatory age verification systems.

The updated regulations redefine how companies demonstrate knowledge of a user's minor status, including through targeted marketing, credible age information, or intentional service direction toward minors. This expanded definition creates new compliance obligations for digital platforms operating in Colorado.

The amendments specifically target system design features that increase engagement, manipulate decision-making, or undermine minors' autonomy. Covered features include those primarily designed to prolong platform usage or shown to cause harm through increased engagement. However, smart exemptions protect features requested by users, necessary for core functionality, or designed to enhance safety through parental controls and time limits.

Significantly, the rules recognize minor-initiated feature activation as valid consent, providing clarity for product teams designing age-appropriate experiences.
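The coverage and exemption logic described above can be sketched as a simple triage rule for a feature inventory. This is a rough simplification for internal review, not the regulation's actual test; the class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesignFeature:
    """Hypothetical record describing one product feature."""
    name: str
    primarily_prolongs_usage: bool = False
    shown_to_cause_harm_via_engagement: bool = False
    requested_by_user: bool = False
    needed_for_core_functionality: bool = False
    safety_enhancing: bool = False  # e.g. parental controls, time limits


def needs_review(feature: DesignFeature) -> bool:
    """Flag features that look covered and match no exemption."""
    covered = (
        feature.primarily_prolongs_usage
        or feature.shown_to_cause_harm_via_engagement
    )
    exempt = (
        feature.requested_by_user
        or feature.needed_for_core_functionality
        or feature.safety_enhancing
    )
    return covered and not exempt


autoplay = DesignFeature("autoplay", primarily_prolongs_usage=True)
opt_in_autoplay = DesignFeature(
    "autoplay", primarily_prolongs_usage=True, requested_by_user=True
)
print(needs_review(autoplay), needs_review(opt_in_autoplay))
```

The second example reflects the point about minor-initiated activation: the same feature drops out of the review queue once the user has asked for it.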

Taking effect 30 days after Attorney General approval (expected before year-end 2025), these amendments position Colorado as a leader in regulating dark patterns and engagement-driven design features. Companies must now assess their digital interfaces for compliance with these stricter minor protection standards.

What this means for your compliance team: The AG will assess intent and willful disregard, making pattern detection and proactive compliance essential, not optional.

As regulations evolve, dark pattern detection isn't just about avoiding penalties. It's about building trust and sustainable growth.

SeaWorld dark pattern class action: How hidden fees and deceptive design cost companies millions

The October 2025 class action against United Parks & Resorts over hidden ticket fees marks a turning point: dark patterns are no longer just a UX ethics issue. They're a competition law violation with existential business consequences.

The Dark Pattern Playbook They Used

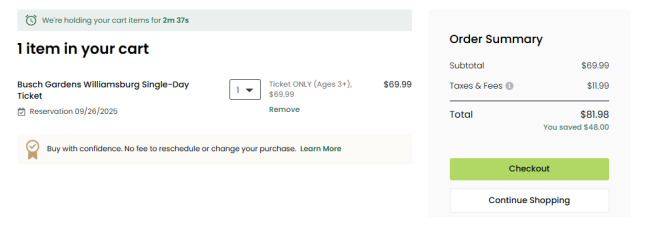

The company deployed textbook manipulative design: drip pricing (hiding $11.99 fees until final checkout), false urgency tactics (countdown timers pressuring completion), misleading terminology ("Taxes & Fees" labels on non-tax charges), and multi-stage commitment escalation (requiring personal data entry before fee disclosure).
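Drip pricing is straightforward to test for: compare the first price shown with the final checkout total. The sketch below assumes a hypothetical $100.00 advertised ticket price; only the $11.99 fee comes from the complaint as described above. It treats any amount beyond the advertised price as undisclosed, which is a simplification, since genuine government taxes may be handled differently.

```python
from decimal import Decimal
from typing import Mapping


def undisclosed_amount(
    advertised_price: Decimal, checkout_items: Mapping[str, Decimal]
) -> Decimal:
    """Amount by which the checkout total exceeds the advertised price."""
    total = sum(checkout_items.values(), Decimal("0"))
    return max(total - advertised_price, Decimal("0"))


# Hypothetical cart: the advertised price is an assumption.
cart = {
    "ticket": Decimal("100.00"),
    "Taxes & Fees": Decimal("11.99"),
}
gap = undisclosed_amount(Decimal("100.00"), cart)
print(f"Undisclosed at first display: ${gap}")
```

A non-zero gap on any checkout path is a signal to show the all-in price from the first screen.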

Research shows why companies use these tactics: drip pricing increases consumer spending by 21%, and countdown timers boost conversion rates significantly. In the short term, it works brilliantly.

Why It Destroys Value Long-Term

The Virginia lawsuit seeks $500-$1,000 per violation. With hundreds of thousands of ticket sales, United Parks faces potential damages exceeding $100 million, far more than any revenue gained from hidden fees.
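The exposure estimate follows from simple multiplication. In the sketch below, the 200,000 figure is a hypothetical stand-in for "hundreds of thousands", and counting each ticket sale as one violation is an assumption; a court could count differently.

```python
from typing import Tuple


def statutory_exposure(
    violations: int, low: int = 500, high: int = 1000
) -> Tuple[int, int]:
    """Return the (minimum, maximum) exposure in dollars."""
    if violations < 0:
        raise ValueError("violations must be non-negative")
    return violations * low, violations * high


# Hypothetical: 200,000 ticket sales, each counted as one violation.
minimum, maximum = statutory_exposure(200_000)
print(f"${minimum:,} to ${maximum:,}")
```

At 200,000 violations, the low end of the range alone reaches $100 million.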

Beyond financial exposure, dark patterns create three fatal business risks: regulatory expansion (15+ states now banning hidden fees), reputation destruction (consumers share manipulation experiences), and competitive disadvantage (transparent competitors gain trust premium as awareness grows).

The companies winning in 2025 and beyond recognize a fundamental truth: customer manipulation might boost quarterly metrics, but customer trust builds lasting businesses. Every dark pattern is borrowed time on a ticking regulatory bomb.

References

- https://www.amnesty.org/en/documents/POL40/0360/2025/en/

- https://www.europarl.europa.eu/news/de/press-room/20251013IPR30892/new-eu-measures-needed-to-make-online-services-safer-for-minors

- https://www.documentcloud.org/documents/26185577-seaworld/