When AI Writes Fake Reviews: The Singapore Case That Sparked a Crackdown

Generative AI is reshaping how we design, from visual branding to entire user interfaces. One recent industry analysis found that 83% of creatives and designers have already integrated AI into their daily workflows. OpenAI’s own research even suggests that web and interface designers are “100% exposed” to automation by tools like ChatGPT.

But as these systems evolve, so do their risks. The same technology that helps us build trust can also be used to imitate it, and quietly distort it.

Imagine reading a glowing review that convinces you to buy a product or book a service online. Now imagine it was written by AI, using someone else’s name and without their consent.

That’s exactly what happened in Singapore, where a business used ChatGPT to write fake 5-star reviews and posted them under real customer identities. The Competition and Consumer Commission of Singapore (CCCS) took enforcement action, calling it a case of AI-assisted deception.

The Case: Fake Reviews, Real Harm

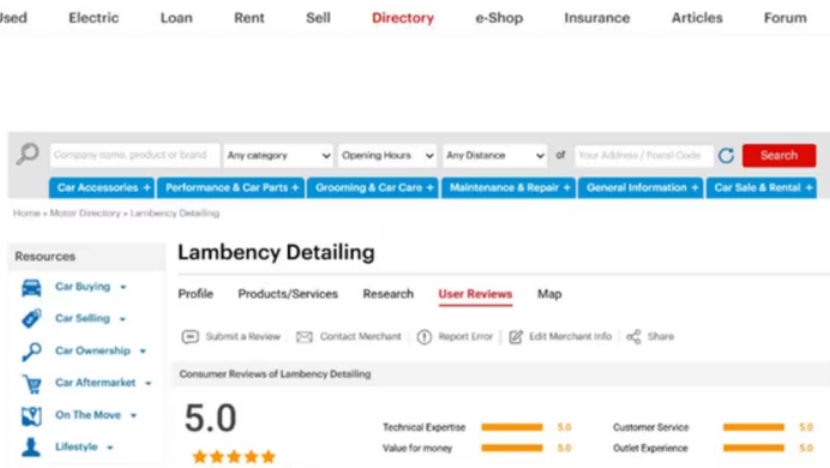

Over a period of two years, Quantum Globe, the company operating the car-detailing business Lambency Detailing, posted dozens of fake 5-star reviews on Lambency’s business page on Sgcarmart.com, a leading platform for car listings and services in Singapore.

But these weren’t just any fake reviews.

Quantum Globe used ChatGPT to generate customized review content that mimicked real customer feedback: describing the services rendered, praising their quality, and using informal, human-sounding language. The company then posted the reviews under real customer identities, including names, license plate numbers, and even car photos, all without consent.

The reviews were submitted through a QR-based feedback system offered by Sgcarmart. Critically, this system allowed anyone to leave a review without logging in or verifying their identity, making it easy for the company to impersonate customers.

The deception unraveled in January 2025, when a customer spotted a review under her name that she never wrote. CCCS launched an investigation and discovered eight confirmed cases of identity misuse.

CCCS deemed the conduct an unfair practice under Singapore’s consumer protection laws. In a formal statement, the agency highlighted the dual harm: consumers were misled by fabricated social proof, and honest competitors were disadvantaged.

In response, Quantum Globe admitted to the misconduct and provided a legally binding undertaking to CCCS. The company agreed to:

- Immediately cease posting fake reviews

- Set up a 6-month feedback channel for reporting fake reviews

- Notify affected customers whose data had been misused

- Publish corrective notices across Sgcarmart and all marketing platforms

- Remove fake reviews within eight working days of verification

- Cooperate with CCCS on monitoring ongoing compliance

The company’s director also gave a personal undertaking not to engage in unfair practices in any business he operates. Meanwhile, SGCM Pte. Ltd., which runs Sgcarmart, committed to exploring stronger safeguards, such as SMS or email verification, to prevent future misuse.

AI Is a Tool, but Who Decides How It’s Used?

The technology at the center of this case, ChatGPT, wasn't misused because it was malicious. It was misused because there were no checks on how it was applied.

This aligns with research by Veronika Krauß and colleagues, who found that large language models like ChatGPT can add fake reviews, urgency cues, and other manipulative patterns to generated websites even from neutral prompts, and without warning the user.

And the consequences are real: users exposed to dark patterns show 50% less trust in brands (Voigt, Schlögl and Groth, 2021).

When AI learns from data filled with dark patterns, it tends to repeat them. And without human oversight, manipulation doesn’t just scale; it becomes normalized.

Before using AI, ask yourself: does this build real trust, or just the appearance of it?

A Wider Shift in Enforcement

By some estimates, nearly 30% of online reviews are fake. The Singapore case isn’t isolated; it’s part of a growing global crackdown on digital deception.

Regulators around the world are taking note. In the EU, fake reviews and dark patterns are prohibited under a web of laws: the Digital Services Act (DSA), the Digital Markets Act, the Unfair Commercial Practices Directive, the GDPR, and the AI Act, whose obligations are being phased in. Under the DSA, violators can now face fines of up to 6% of their global annual revenue. In the U.S., these practices fall under Section 5 of the FTC Act.

By one estimate, fake reviews cost U.S. businesses over $150 billion a year in lost revenue, a reminder that manipulation isn’t just unethical; it’s expensive.

In Singapore, CCCS has joined this momentum, and its message is clear: AI-powered deception won’t be treated as innovation. It’s a violation.

Designing for Integrity: FairPatterns’ Role

At FairPatterns, we help platforms and product teams detect and fix digital design violations, including fake reviews and dark patterns, before they lead to consumer harm or regulatory risk.

Our AI tool helps teams:

- Detect manipulative patterns 100x faster than manual audits

- Fix them with tested, compliant designs that are easy to implement

- Prevent future risks with proactive design guidance for operational teams

From detection to redesign, we help companies design for clarity, trust, and compliance, not deception.

Trust Can’t Be Faked

A 5-star review should signal quality, not deception. But when businesses use AI to fake trust, the harm goes beyond one platform. It damages user confidence, punishes honest competitors, and invites regulatory scrutiny.

It’s time to design digital spaces where trust is earned, not copied.

Let’s build with integrity and keep our reviews real: https://www.fairpatterns.com/contact-us

💫 Regain your freedom online.

References:

83% of creatives are already using machine learning tools – is now the time to get on side with AI? (2023, November 15). Shades of Intelligence.

Eloundou, T., Manning, S., Mishkin, P., & Rock, D. (2023, March 17). GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models. arXiv.org. https://arxiv.org/abs/2303.10130

Krauß, V., McGill, M., Kosch, T., Thiel, Y., Schön, D., & Gugenheimer, J. (2024, November 5). "Create a Fear of Missing Out" -- ChatGPT Implements Unsolicited Deceptive Designs in Generated Websites Without Warning. arXiv.org. https://arxiv.org/abs/2411.03108

Vaghasiya, K. (2025, May 20). 15 Fake Review Statistics You Can’t Ignore (2025). WiserNotify. https://wisernotify.com/blog/fake-review-stats/

Action Taken Against Lambency Detailing for AI-Generated Fake Reviews on Sgcarmart.com. (n.d.). Competition and Consumer Commission of Singapore. https://www.cccs.gov.sg/media-and-events/newsroom/announcements-and-media-releases/action-taken-against-lambency-detailing-for-ai-generated-fake-reviews-on-sgcarmart-com